Amazon EKS Upgrade Journey From 1.25 to 1.26 (Electrifying!)

We are now welcoming “Electrifying”. This story covers the process and considerations of upgrading the EKS control plane to version 1.26.

Overview

The theme for Kubernetes v1.26 is Electrifying.

The Kubernetes team has once again delivered a great, electrifying release. The team recognises the importance of all the building blocks on which Kubernetes is developed and used, while at the same time raising awareness of the importance of taking the energy consumption footprint into account: environmental sustainability is an inescapable concern of creators and users of any software solution, and the environmental footprint of software like Kubernetes is an area which we all believe will play a significant role in future releases.

There are a couple of major themes that come with this release. Many of them won't actually be available in EKS, as alpha features are not supported and new beta APIs are no longer enabled by default.

Previous Stories and Upgrades

If you are looking at:

- upgrading EKS from 1.24 to 1.25, check out this story

- upgrading EKS from 1.23 to 1.24, check out this story

- upgrading EKS from 1.22 to 1.23, check out this story

- upgrading EKS from 1.21 to 1.22, check out this story

- upgrading EKS from 1.20 to 1.21, check out this story

Kubernetes 1.26 Major updates

Change in container image registry

A well-known change has finally been delivered: the move away from the Google-hosted registry. The new registry spreads the load across multiple cloud providers and regions, reducing reliance on a single entity and providing a faster download experience for a large number of users.

This release of Kubernetes is the first that is exclusively published in the new registry.k8s.io container image registry. In the (now legacy) k8s.gcr.io image registry, no container image tags for v1.26 will be published, and only tags from releases before v1.26 will continue to be updated.
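Checking whether any of your workloads still pull from the legacy registry is worthwhile before you rely on new tags. Below is a minimal shell sketch of one way to do it. It assumes kubectl is configured against the cluster, and the helper names are hypothetical, not part of any tool. The sketch only inspects the regular containers of existing pods (not init containers). It prints each legacy image next to its registry.k8s.io path, because the new registry serves the same image paths.

```shell
# Hypothetical helpers for spotting images still pulled from k8s.gcr.io.

# Print the image of every regular container in every pod, one per line.
cluster_images() {
  kubectl get pods --all-namespaces \
    -o jsonpath='{range .items[*]}{range .spec.containers[*]}{.image}{"\n"}{end}{end}'
}

# Read image references on stdin and print each unique k8s.gcr.io image
# together with the equivalent registry.k8s.io reference.
legacy_registry_images() {
  { grep '^k8s\.gcr\.io/' || true; } | sort -u | while read -r image; do
    printf '%s -> %s\n' "$image" "registry.k8s.io/${image#k8s.gcr.io/}"
  done
}
```

Usage: cluster_images | legacy_registry_images. No output means no running container references k8s.gcr.io.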

CRI v1alpha2 removed

With the adoption of the Container Runtime Interface (CRI) and the removal of dockershim in v1.24, the CRI is the only supported and documented way through which Kubernetes interacts with different container runtimes. Each kubelet negotiates which version of CRI to use with the container runtime on that node. Kubernetes v1.26 drops support for CRI v1alpha2. That removal will result in the kubelet not registering the node if the container runtime doesn't support CRI v1.

By default, Amazon Linux and Bottlerocket AMIs include containerd version 1.6.6. I use Amazon Linux nodes, so I didn't have to upgrade anything. The minimum containerd version you need is 1.6.0.
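To confirm this on your own nodes, you can read the runtime version that each kubelet reports. The sketch below assumes kubectl access, and the helper names are hypothetical. The version check only compares the major and minor numbers against the 1.6.0 minimum mentioned above, and it skips nodes that run a runtime other than containerd.

```shell
# Hypothetical helpers for finding nodes whose containerd is older than 1.6.0.

# Print "<node-name> <runtime-version>" for every node, e.g. "ip-10-0-1-5 containerd://1.6.6".
node_runtimes() {
  kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion
}

# Read "<node> <runtime>" lines on stdin and print the containerd nodes below 1.6.
# Lines for other runtimes are ignored.
old_containerd_nodes() {
  awk '$2 ~ /^containerd:\/\// {
    v = $2
    sub(/^containerd:\/\/v?/, "", v)
    split(v, part, ".")
    if (part[1] + 0 < 1 || (part[1] + 0 == 1 && part[2] + 0 < 6)) print $1, $2
  }'
}
```

Usage: node_runtimes | old_containerd_nodes. An empty result means every containerd node meets the minimum.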

Storage improvements

Following the GA of the core Container Storage Interface (CSI) Migration feature in the previous release, CSI migration remains an ongoing effort that has spanned several releases. This release continues to add (and remove) features aligned with the migration's goals, as well as other improvements to Kubernetes storage.

Delegate FSGroup to CSI Driver graduated to stable

This feature allows Kubernetes to supply the pod's fsGroup to the CSI driver when a volume is mounted, so that the driver can utilise mount options to control volume permissions. Previously, the kubelet would always apply the fsGroup ownership and permission change to files in the volume according to the policy specified in the Pod's .spec.securityContext.fsGroupChangePolicy field. Starting with this release, CSI drivers have the option to apply the fsGroup settings at attach or mount time of the volumes.

Support for Windows privileged containers graduates to stable

Privileged container support allows containers to run with similar access to the host as processes that run on the host directly. Support for this feature on Windows nodes, called HostProcess containers, graduates to stable in this release, enabling access to host resources from privileged containers.

Feature metrics are now available

Feature metrics are now available for each Kubernetes component, making it possible to track whether each active feature gate is enabled by checking the component's metrics endpoint for the kubernetes_feature_enabled metric.
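For the API server, the metrics endpoint is reachable through kubectl get --raw /metrics, assuming your identity is allowed to read it. The sketch below filters that output down to the feature gates reported as enabled. The helper name is hypothetical. The sketch also assumes each series carries the gate name in its name label, with a value of 1 meaning enabled.

```shell
# Hypothetical helper: read Prometheus text from a component's /metrics
# endpoint on stdin and print the names of feature gates whose value is 1.
enabled_features() {
  { grep '^kubernetes_feature_enabled{' || true; } \
    | awk '$NF == 1' \
    | sed 's/.*name="\([^"]*\)".*/\1/'
}
```

Usage: kubectl get --raw /metrics | enabled_features. The result reflects whichever API server instance answers the request.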

Retired API versions and features

- flowcontrol.apiserver.k8s.io/v1beta1 API version. Among the API versions that were removed in 1.26 is the flowcontrol.apiserver.k8s.io/v1beta1 API, which was marked as deprecated in v1.23. This API version is related to Kubernetes' API Priority and Fairness feature. If you are using the v1beta1 API version of FlowSchema and PriorityLevelConfiguration resources, update your Kubernetes manifests and API clients to use the newer flowcontrol.apiserver.k8s.io/v1beta2 API version instead. The default kubectl get output does not show which API version an object was created with. A more reliable check is the API server's apiserver_requested_deprecated_apis metric, which records requests made to deprecated APIs:

```shell
kubectl get --raw /metrics | grep apiserver_requested_deprecated_apis | grep 'group="flowcontrol.apiserver.k8s.io".*version="v1beta1"'
```

- autoscaling/v2beta2 API version. Among the API versions that were removed in Kubernetes v1.26 is the autoscaling/v2beta2 API, which was marked as deprecated in v1.23. If you are currently using the autoscaling/v2beta2 API version of the HorizontalPodAutoscaler, update your applications to use the autoscaling/v2 API version instead. To check whether any client still requests the retired version, run:

```shell
kubectl get --raw /metrics | grep apiserver_requested_deprecated_apis | grep 'group="autoscaling".*version="v2beta2"'
```

The metric is kept per API server instance and resets when that instance restarts. Because of this, it is also worth searching your manifests and Helm charts for the retired versions.

In Kubernetes, deprecating a feature or flag means that it will be gradually phased out and eventually removed in a future version. There were a lot of deprecations in Kubernetes version 1.26. For a complete list, refer to all Deprecations and removals in Kubernetes v1.26.

Important!

Before you upgrade to Kubernetes 1.26, upgrade your Amazon VPC CNI plugin for Kubernetes to version 1.12 or later. If you don't, earlier versions of the Amazon VPC CNI plugin for Kubernetes crash on 1.26.

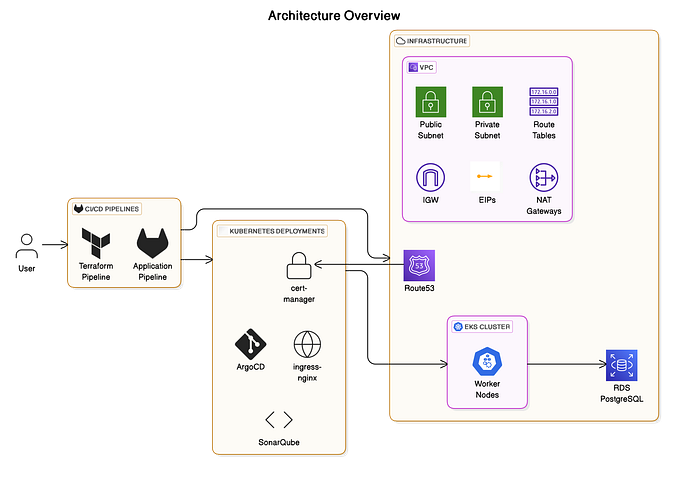
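Before bumping the cluster version, confirm which VPC CNI version is actually running. The sketch below assumes the plugin runs as the aws-node daemon set in kube-system (the EKS default), with the CNI container listed first. The helper names are hypothetical. The comparison only looks at the major and minor parts of the image tag.

```shell
# Hypothetical helpers for checking the VPC CNI version before upgrading to 1.26.

# Print the image tag of the first container in the aws-node daemon set,
# e.g. "v1.12.2-eksbuild.1".
current_cni_tag() {
  image=$(kubectl get daemonset aws-node --namespace kube-system \
    -o jsonpath='{.spec.template.spec.containers[0].image}')
  printf '%s\n' "${image##*:}"
}

# Exit 0 when the given tag is version 1.12 or later, 1 otherwise.
cni_at_least_1_12() {
  printf '%s\n' "$1" | awk -F. '{
    major = $1; sub(/^v/, "", major); major += 0
    minor = $2 + 0
    if (major > 1 || (major == 1 && minor >= 12)) exit 0
    exit 1
  }'
}
```

Usage: cni_at_least_1_12 "$(current_cni_tag)" || echo "Upgrade the VPC CNI before moving to 1.26"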

Upgrade your EKS with Terraform

This time, the control plane upgrade took around 10 minutes and didn't cause any issues. AWS are doing a great job at reducing the time it takes to upgrade the EKS control plane.

I immediately upgraded the worker nodes, which took around 10–20 minutes to join the upgraded EKS cluster. This time depends on how many worker nodes you have and how many pods need to be drained from the old nodes.

Overall, the full upgrade process (control plane plus worker nodes) took around 22 minutes. Really good time, I would say.

I personally use Terraform to deploy and upgrade my EKS clusters. Here is an example of the EKS cluster resource.

```hcl
resource "aws_eks_cluster" "cluster" {
  enabled_cluster_log_types = ["audit"]
  name                      = local.name_prefix
  role_arn                  = aws_iam_role.cluster.arn

  # Bumping this value is what triggers the control plane upgrade
  version = "1.26"

  vpc_config {
    subnet_ids              = flatten([module.vpc.public_subnets, module.vpc.private_subnets])
    security_group_ids      = []
    endpoint_private_access = true
    endpoint_public_access  = true
  }

  # Encrypt Kubernetes secrets with a KMS key
  encryption_config {
    resources = ["secrets"]

    provider {
      key_arn = module.kms-eks.key_arn
    }
  }

  tags = var.tags
}
```

For worker nodes, I used the official AMI with ID ami-0cc39b918c393fdb7. I didn't notice any issues after rotating all nodes. The nodes are running the following version: v1.26.2-eks-a59e1f0.
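After rotating the nodes, you can confirm that none are left on the old version. The sketch below assumes kubectl access, and the helper names are hypothetical. It simply compares each kubelet version string against a prefix such as v1.26., so it does not handle version ranges.

```shell
# Hypothetical helpers for confirming that every node has rotated onto 1.26.

# Print "<node-name> <kubelet-version>" for every node.
node_versions() {
  kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion
}

# Read "<node> <version>" lines on stdin and print the nodes whose kubelet
# version does not start with the given prefix, e.g. "v1.26.".
nodes_not_on() {
  awk -v want="$1" 'index($2, want) != 1 { print $1, $2 }'
}
```

Usage: node_versions | nodes_not_on v1.26. prints nothing once the rotation is complete.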

The templates I use to create EKS clusters with Terraform can be found in my GitHub repository: https://github.com/marcincuber/eks/tree/master/terraform-aws

Please note that after the EKS upgrade, the API server was not reachable for about 45 seconds. After that, requests were handled normally.
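If your pipeline runs kubectl steps straight after the control plane upgrade, a short readiness wait avoids failing during that window. The sketch below shows one way to do it, assuming kubectl is configured. /readyz is the API server's standard readiness endpoint. The helper names, retry count and delay are hypothetical choices, sized comfortably above the 45 seconds observed here.

```shell
# Hypothetical helper: run a command up to <attempts> times, sleeping <delay>
# seconds between tries; return 0 on the first success, 1 if every attempt fails.
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Wait roughly two and a half minutes at most (30 tries, 5 seconds apart)
# for the API server readiness endpoint to answer.
wait_for_api() {
  retry_until 30 5 kubectl get --raw /readyz
}
```

Calling wait_for_api right after the upgrade step lets later steps start only once the API server responds again.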

Upgrading Managed EKS Add-ons

In this case, the change is trivial and works fine: simply update the version of the add-on. As of this release, I utilise the kube-proxy, CoreDNS and aws-ebs-csi-driver add-ons.
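To find which add-on version EKS treats as the default for a given Kubernetes version, you can query the EKS API. The sketch below uses aws eks describe-addon-versions and assumes that, when the call is filtered by --kubernetes-version, the first compatibilities entry corresponds to the requested version. It also assumes the AWS CLI text output puts each version and its default flag on one whitespace-separated line. The helper names are hypothetical.

```shell
# Hypothetical helpers for looking up EKS add-on versions for a Kubernetes version.

# Print "<addon-version> <is-default>" lines, e.g. for: addon_versions 1.26 coredns
addon_versions() {
  aws eks describe-addon-versions \
    --kubernetes-version "$1" \
    --addon-name "$2" \
    --query 'addons[].addonVersions[].[addonVersion, compatibilities[0].defaultVersion]' \
    --output text
}

# Read those lines on stdin and print the first version flagged as the default.
default_addon_version() {
  awk 'tolower($2) == "true" { print $1; exit }'
}
```

Usage: addon_versions 1.26 kube-proxy | default_addon_version. The default is what EKS installs when no version is specified, which is not necessarily the newest build.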

Terraform resources for add-ons

```hcl
# cluster_name references the aws_eks_cluster resource shown earlier;
# use aws_eks_cluster.cluster[0].name instead if that resource is created with count.
# OVERWRITE replaces conflicting manual changes with the add-on's EKS defaults.

resource "aws_eks_addon" "kube_proxy" {
  cluster_name      = aws_eks_cluster.cluster.name
  addon_name        = "kube-proxy"
  addon_version     = "v1.26.2-eksbuild.1"
  resolve_conflicts = "OVERWRITE"
}

resource "aws_eks_addon" "core_dns" {
  cluster_name      = aws_eks_cluster.cluster.name
  addon_name        = "coredns"
  addon_version     = "v1.9.3-eksbuild.2"
  resolve_conflicts = "OVERWRITE"
}

resource "aws_eks_addon" "aws_ebs_csi_driver" {
  cluster_name      = aws_eks_cluster.cluster.name
  addon_name        = "aws-ebs-csi-driver"
  addon_version     = "v1.17.0-eksbuild.1"
  resolve_conflicts = "OVERWRITE"
}
```

After upgrading the EKS control plane

Remember to upgrade core deployments and daemon sets that are recommended for EKS 1.26.

- CoreDNS: v1.9.3-eksbuild.2

- kube-proxy: v1.26.2-eksbuild.1

- VPC CNI: v1.12.2-eksbuild.1

- aws-ebs-csi-driver: v1.17.0-eksbuild.1

The above is just a recommendation from AWS. You should also look at upgrading all your other components to versions that support Kubernetes 1.26. They could include:

- calico-node

- cluster-autoscaler or Karpenter

- kube-state-metrics

- metrics-server

- csi-secrets-store

- calico-typha and calico-typha-horizontal-autoscaler

- reloader
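To see which versions of these components you are currently running, you can list the images of every deployment and daemon set. The sketch assumes kubectl access. The helper names and the name filter are hypothetical; the filter simply mirrors the list above, so adjust the pattern to your own add-ons. You can then compare the tags against each project's compatibility notes for Kubernetes 1.26.

```shell
# Hypothetical helpers for reviewing the images of the components listed above.

# Print "<namespace> <name> <images>" for every deployment and daemon set;
# multiple container images are comma-separated.
workload_images() {
  kubectl get deployments,daemonsets --all-namespaces --no-headers \
    -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,IMAGES:.spec.template.spec.containers[*].image'
}

# Keep only the lines that mention one of the components of interest.
components_of_interest() {
  grep -E 'calico|cluster-autoscaler|karpenter|kube-state-metrics|metrics-server|secrets-store|reloader' || true
}
```

Usage: workload_images | components_of_interest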

Summary and Conclusions

A super quick and easy control plane upgrade to Kubernetes 1.26: the task was completed in 10 minutes. I use Terraform to run upgrades, so the pipeline made my life easy.

Yet again, there were no significant issues. All workloads worked just fine, and I didn't really have to modify anything. I hope you will have an equally easy job to perform.

If you are interested in the entire Terraform setup for EKS, you can find it on my GitHub: https://github.com/marcincuber/eks/tree/master/terraform-aws

I hope this article nicely aggregates all the important information around upgrading EKS to version 1.26 and helps people speed up their task.

Long story short, you hate and/or you love Kubernetes but you still use it ;).

Enjoy Kubernetes!!!

Sponsor Me

As with any other story I have written on Medium, I performed the tasks documented here. This is my own research and the issues I have encountered.

Thanks for reading, everybody. Marcin Cuber